AWS Organizations with Terraform Workspaces

There are three boring things in life that DevOps engineers need to do:

- Grant each dev the correct set of permissions, so they can't access more than they should, nor less than they need;

- Replicate resources across environments;

- Keep an eye on costs.

For all of those, AWS Organizations combined with Terraform workspaces is the way to go.

I'm about to show you how to use AWS Organizations to your advantage, with meaningful examples, and how to use Terraform to manage the accounts and replicate resources across them.

This post assumes you know the basics of AWS and Terraform. I'll mention services like IAM and SQS, and show some Terraform code.

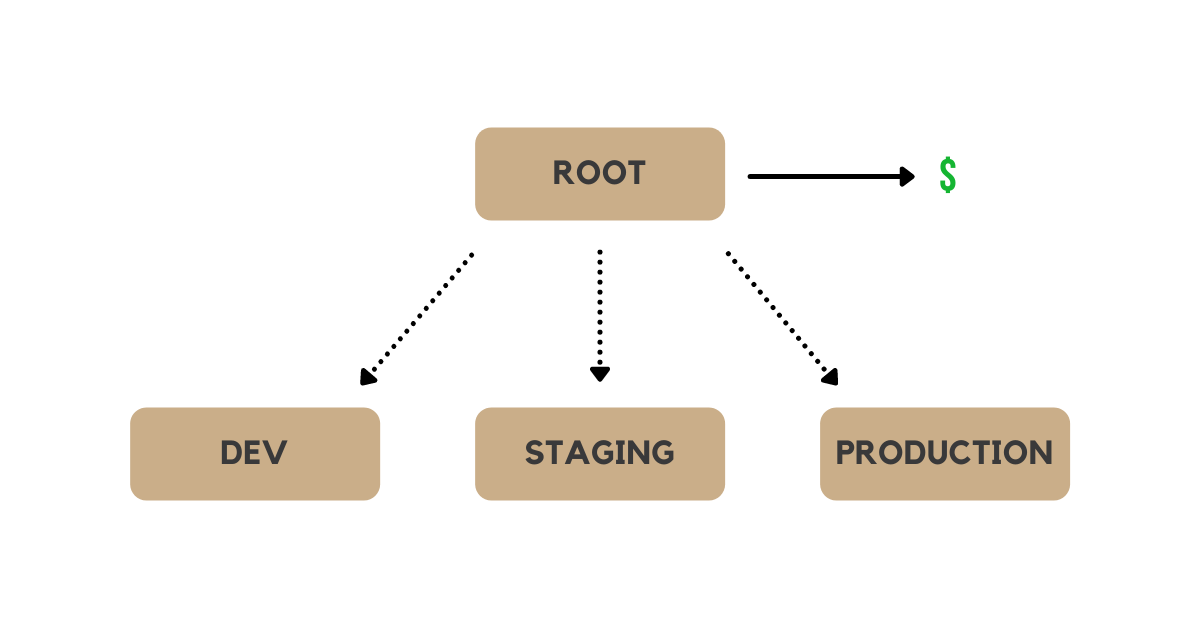

🗄️ What are AWS Organizations?

It's an AWS feature that turns one AWS account into the parent of an organization, from which it manages child AWS accounts. The parent (or root) account is then responsible for paying the bills of all these accounts.
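Since the rest of this post relies on Terraform, here's a minimal sketch of how the organization itself and one child account could be declared from the root account, using the AWS provider's `aws_organizations_organization` and `aws_organizations_account` resources. The account name and email are hypothetical, and `role_name` is set to `admin-role` only to match the role name used in the later examples:

```hcl
# Sketch: run with credentials of the parent (root) account.
provider "aws" {
  region = "us-east-1"
}

# Makes this account the management (root) account of a new organization.
# If the organization already exists, import it instead of creating it.
resource "aws_organizations_organization" "org" {
  feature_set = "ALL"
}

# A child account; its bills are paid by the root account.
resource "aws_organizations_account" "staging" {
  name  = "staging"                 # hypothetical account name
  email = "aws-staging@example.com" # hypothetical; must not belong to any other AWS account

  # Role that AWS Organizations pre-creates inside the child account,
  # trusting the root account. Defaults to OrganizationAccountAccessRole.
  role_name = "admin-role"

  # Make sure the organization exists before adding accounts to it
  depends_on = [aws_organizations_organization.org]
}
```

Note that, by default, destroying an `aws_organizations_account` resource only removes the account from the organization; it doesn't close it unless `close_on_deletion` is set.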

✨ Benefits of AWS Organizations

A well-structured setup makes a lot of sense for:

- Different environments (e.g. staging and production)

- Specific projects that may use many resources and need close monitoring

- Isolated customer projects

Out of the box, a setup with separate accounts per project gives you:

Safer configuration of resources, since they can't touch each other unless you explicitly allow them to. It's also a good incentive to create dedicated VPCs, roles, etc., instead of reusing the same ones.

No resource naming hell: instead of creating, for example, three RDS instances named main-database-dev, main-database-staging, and main-database-production (and risking connecting services from one environment to another environment's database by mistake), all of them can have the exact same name, main-database, each living in its own isolated account.

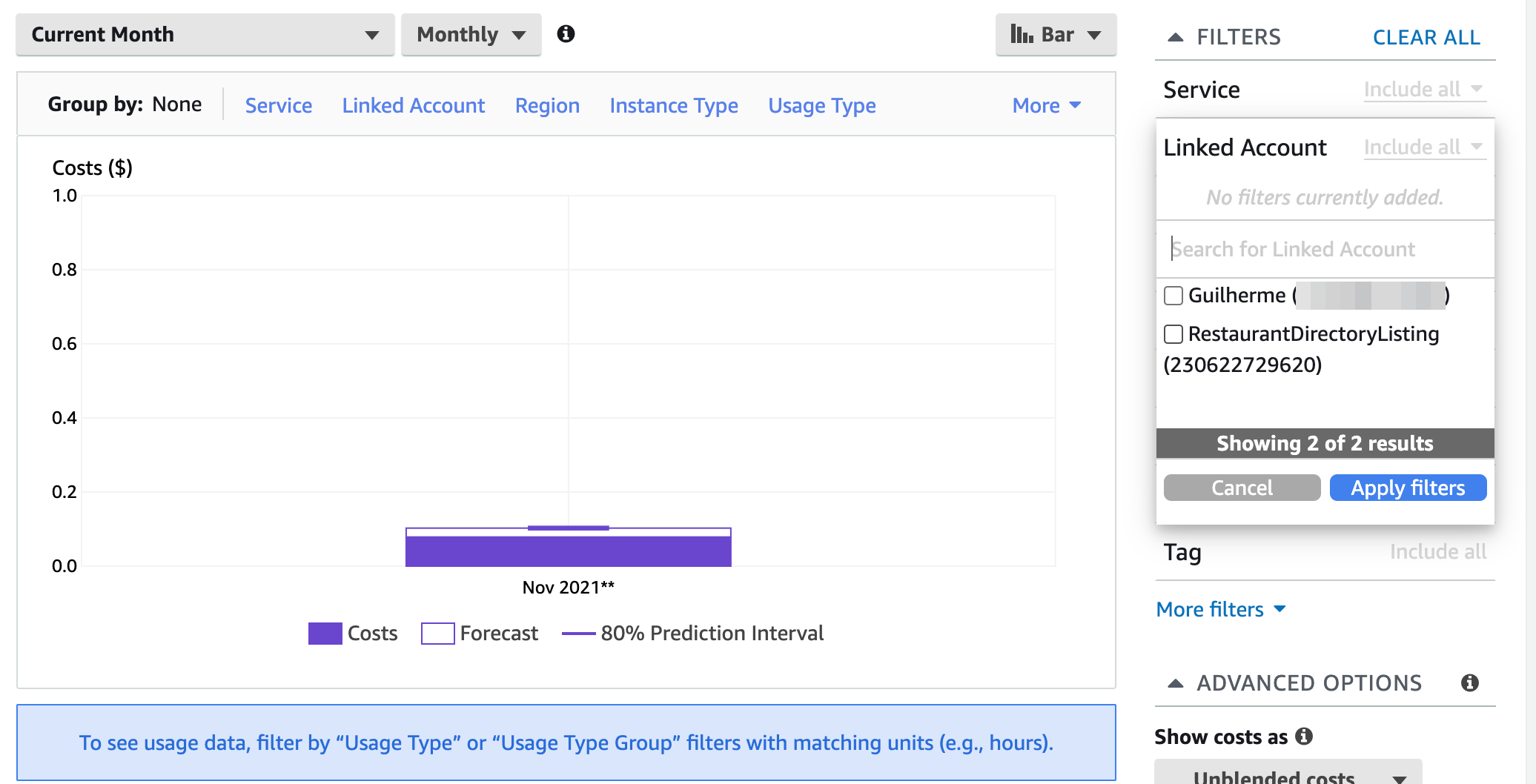

Consolidated billing of accounts, so you can understand which customer of yours consumes more resources, how much it costs you to keep a staging environment, and so on.

🔓 How do permissions and access across accounts work?

I'll use a realistic example from places I've worked at.

It's common for companies to have at least three environments, so it's good to keep them split into different accounts:

| Environment | Motivation |
|---|---|
| dev | Environment with constant changes and testing; very unstable. |
| staging | Slightly more stable environment, used frequently for testing and release candidates. |
| production | Stable environment; this is the one customers use and consume. |

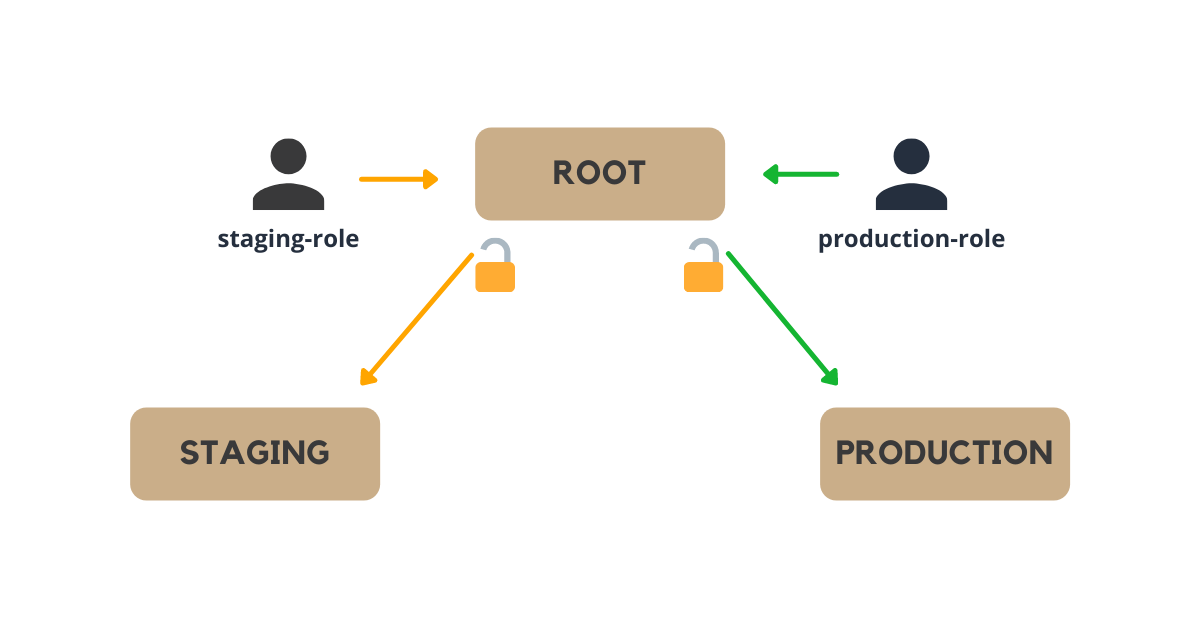

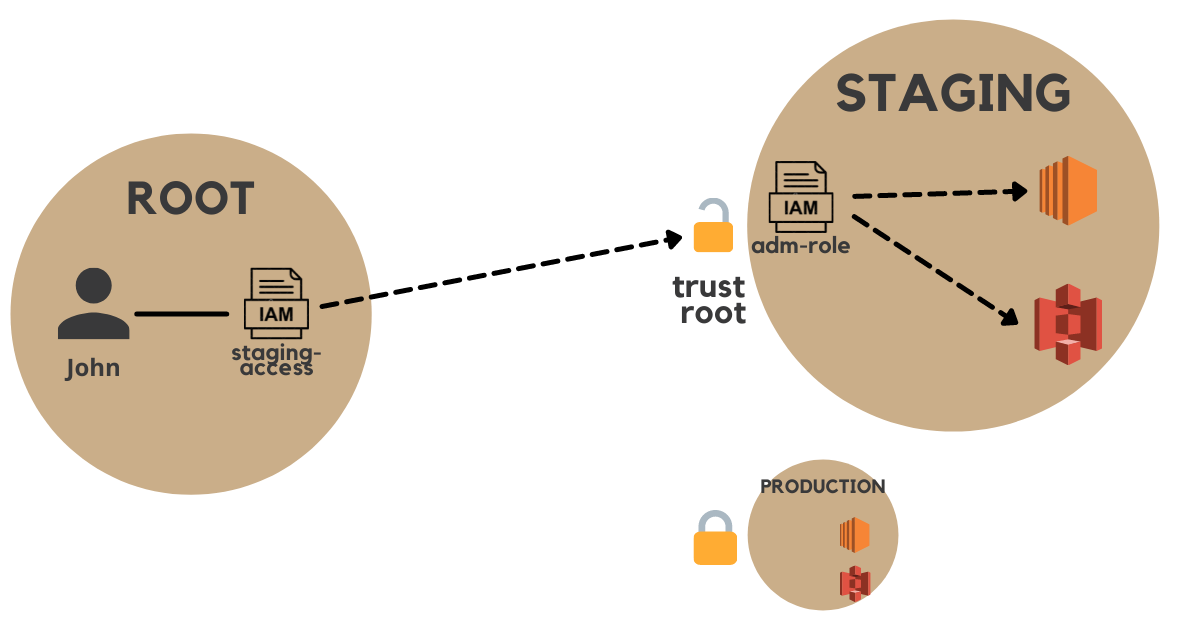

Since the child accounts are separate AWS accounts, each with its own set of IAM users, RDS instances, VPCs, etc., the usual way for users in the root account to interact with any of them is by assuming a role.

Note that you can easily create IAM users inside each new account, as you normally would. I don't recommend that approach because:

- You now have to keep track of many different users (e.g. are all of them rotating their secrets? Do they have MFA enabled?);

- Each engineer ends up with many access key/secret pairs.

It's way easier (and consequently safer) to manage such access through roles, because then you can limit which users from the root account can assume which roles in the child account.

As soon as you create a managed child account, you need to deal with roles and permissions.

It might be hard to visualize all the permissions in place. Let's break it down to keep things simple:

1️⃣ Root account: Permission to assume a role in the Staging account

Here, 000000000000 represents the child (Staging) AWS account, and admin-role is the role that lives inside it:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "arn:aws:iam::000000000000:role/admin-role"
    }
  ]
}
```

If you prefer Terraform, here's how to create an IAM group with the permissions mentioned above:

```hcl
# 1️⃣ Set up some variables for the organization
locals {
  # ROOT account
  group_name  = "staging-developers"
  policy_name = "staging-access"
  iam_path    = "/"

  # PROJECT account
  child_aws_account         = "000000000000"
  role_in_child_aws_account = "admin-role"
}

# 2️⃣ Create a group that can access staging
resource "aws_iam_group" "staging_group" {
  name = local.group_name
  path = local.iam_path
}

# 3️⃣ Define what can be done, and on what/where
data "aws_iam_policy_document" "staging_access_spec" {
  statement {
    actions = [
      "sts:AssumeRole", # 👈 You can "AssumeRole"
    ]

    # 👇 Upon this resource (i.e. inside this AWS account, this role)
    resources = ["arn:aws:iam::${local.child_aws_account}:role/${local.role_in_child_aws_account}"]
  }
}

# 4️⃣ 👇 Attach the policy we just defined to the group we just created
resource "aws_iam_group_policy" "staging_group_policy" {
  name   = local.policy_name
  group  = aws_iam_group.staging_group.name
  policy = data.aws_iam_policy_document.staging_access_spec.json
}
```
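The group doesn't grant anything to anyone until users join it. A minimal sketch of adding a user, assuming a hypothetical existing IAM user named `jane.doe` in the root account:

```hcl
# Sketch: add an existing root-account user to the staging group.
# "jane.doe" is a hypothetical IAM user name.
resource "aws_iam_user_group_membership" "jane_staging" {
  user = "jane.doe"

  # Group created in step 2️⃣ above
  groups = [aws_iam_group.staging_group.name]
}
```

I picked `aws_iam_user_group_membership` because it's non-exclusive: it only manages this user's memberships, whereas `aws_iam_group_membership` manages the group's entire member list and would remove users added to the group elsewhere.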

2️⃣ Staging account: admin-role permissions to resources and trust relationships

Note: By default, AWS Organizations creates equivalent definitions when you create a child account (the role is named OrganizationAccountAccessRole unless you pick another name).

Since this is staging, let's say it's fine to be permissive. This role contains an admin policy granting access to everything:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "*",
      "Resource": "*"
    }
  ]
}
```

But we limit who can assume that role! Its trust policy (the trusted entity) allows just one specific AWS account, the root account, to do that:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::111111111111:root"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Here, 111111111111 stands for the root account's full 12-digit ID.

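Trusting the root account's `:root` principal delegates the decision to the root account: any IAM identity there whose own policy allows `sts:AssumeRole` on this role (like the staging-developers group above) can assume it. We can narrow permissions down further by having several roles in a production account and allowing only a subset of users to assume each of them.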
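Here's a sketch of what such a narrower role could look like in Terraform. It assumes placeholder IDs 111111111111 (root account) and 222222222222 (production account), a hypothetical role name `readonly-role`, and that Terraform reaches the production account through its `admin-role`. The role trusts only the root account and gets the AWS-managed `ReadOnlyAccess` policy:

```hcl
# Sketch: a read-only role inside the Production account.
# Placeholders: 111111111111 = root account, 222222222222 = production account.
provider "aws" {
  alias  = "production"
  region = "us-east-1"

  # Terraform reaches the production account through its admin role
  assume_role {
    role_arn = "arn:aws:iam::222222222222:role/admin-role"
  }
}

# Trust policy: only identities from the root account may assume the role
data "aws_iam_policy_document" "trust_root_account" {
  provider = aws.production

  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::111111111111:root"]
    }
  }
}

resource "aws_iam_role" "readonly" {
  provider           = aws.production
  name               = "readonly-role" # hypothetical role name
  assume_role_policy = data.aws_iam_policy_document.trust_root_account.json
}

# AWS-managed policy with read-only permissions across services
resource "aws_iam_role_policy_attachment" "readonly" {
  provider   = aws.production
  role       = aws_iam_role.readonly.name
  policy_arn = "arn:aws:iam::aws:policy/ReadOnlyAccess"
}
```

In the root account, you'd then grant `sts:AssumeRole` on `arn:aws:iam::222222222222:role/readonly-role` only to the group that needs it, the same way the staging-developers group got access to the staging admin role.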

🛂 Accessing AWS child accounts from root account

It might sound like accessing such accounts would be extremely boring or slow. It turns out that once you understand how the permissions work (as explained above), it becomes simple. See:

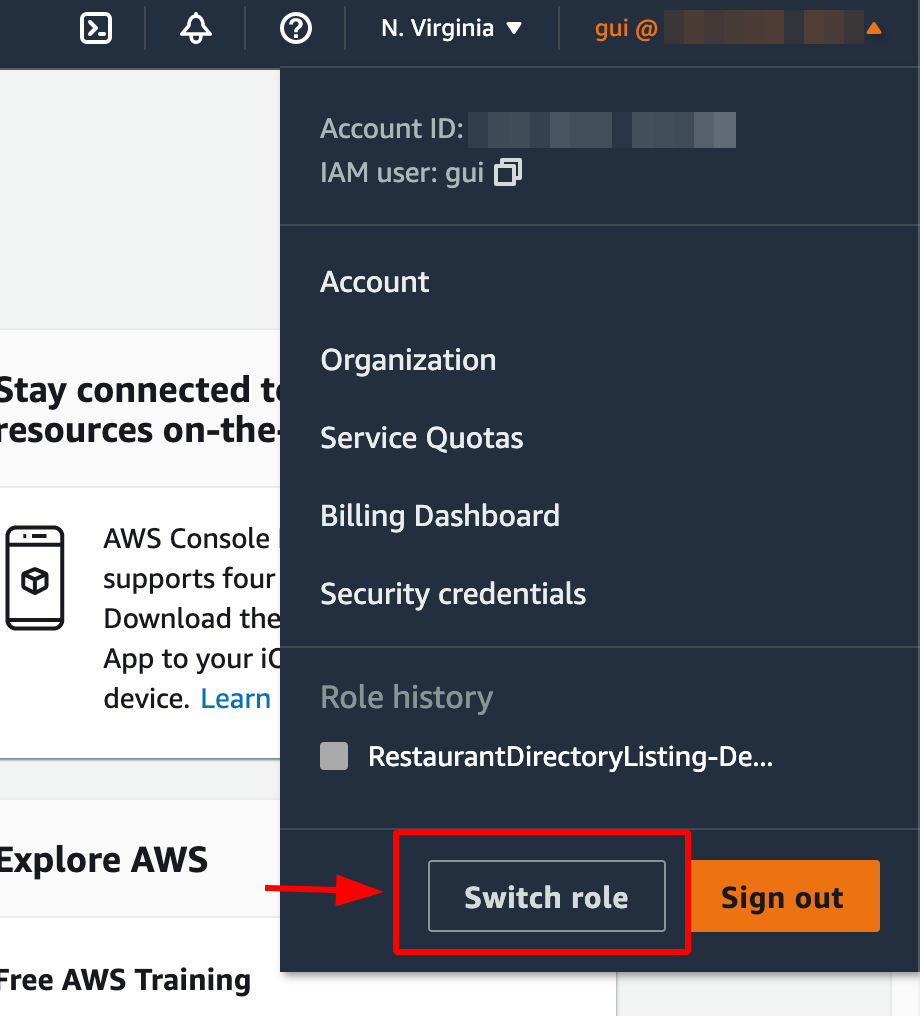

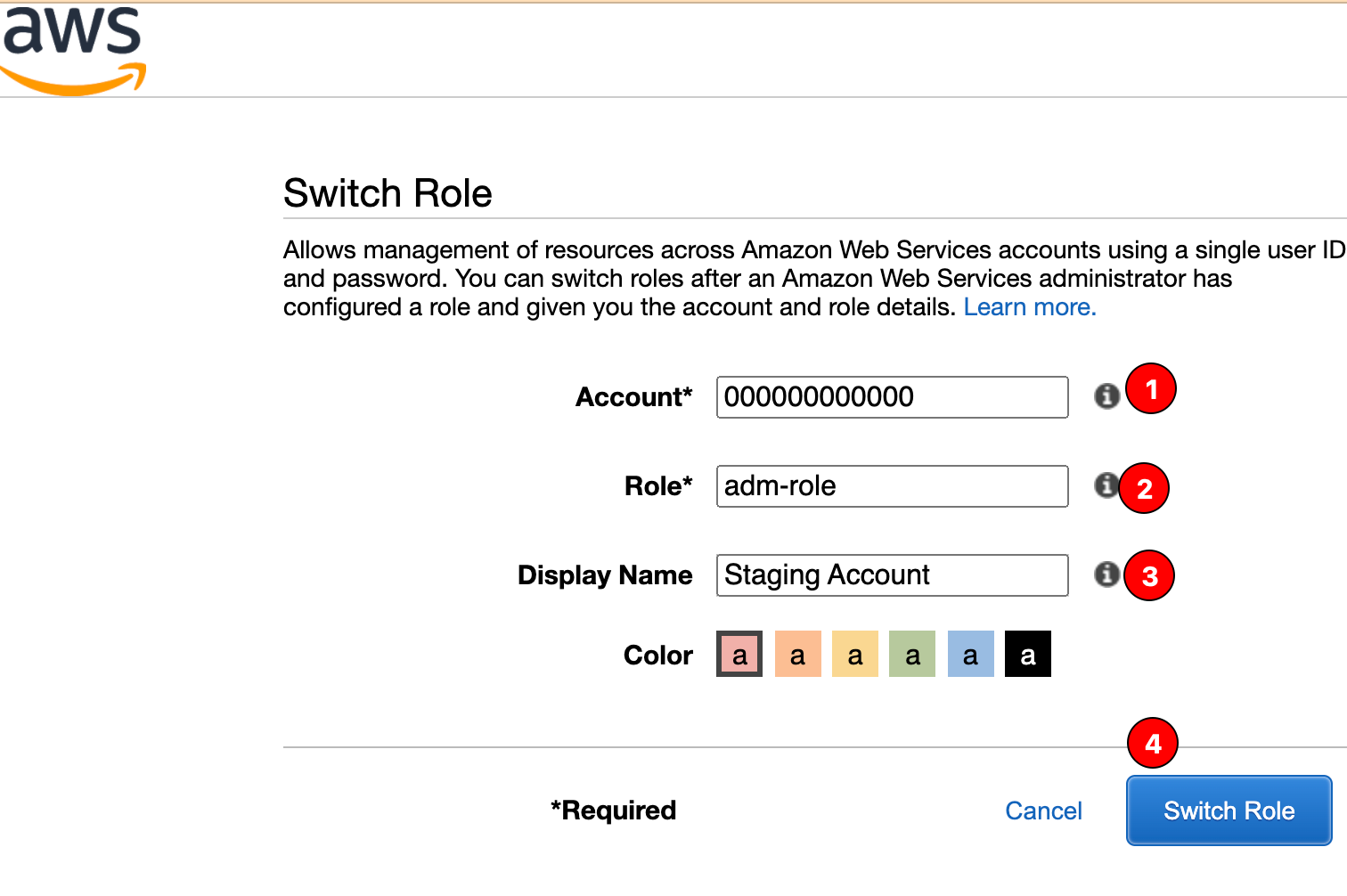

🪖 Set up the AWS console to access a child account

To do that, you need to be logged in as an IAM user (i.e. you can't do this as the account's root user).

Then you're free to access child accounts by filling in the Switch Role form:

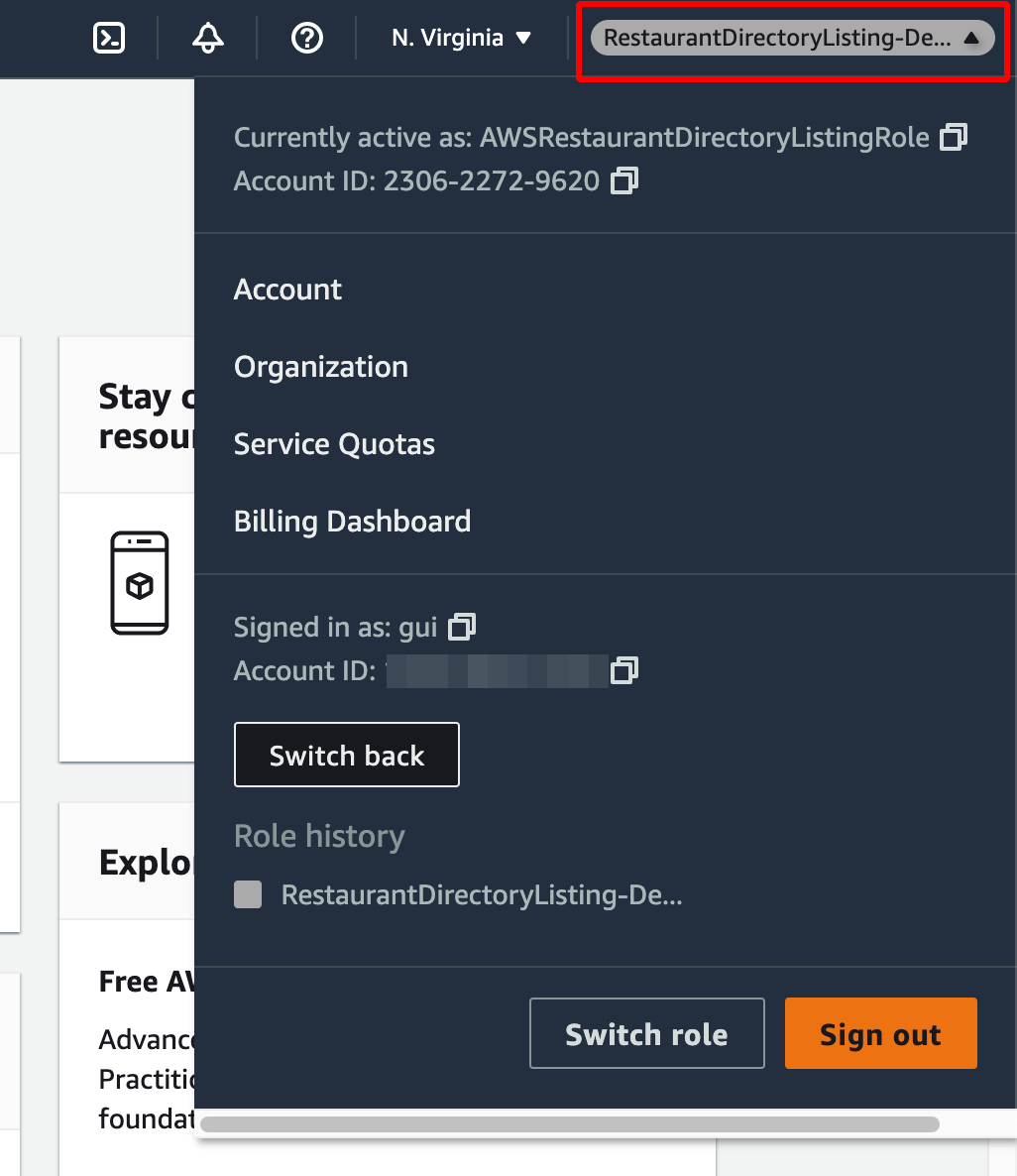

And finally, you should see the console again, but this time for another account:

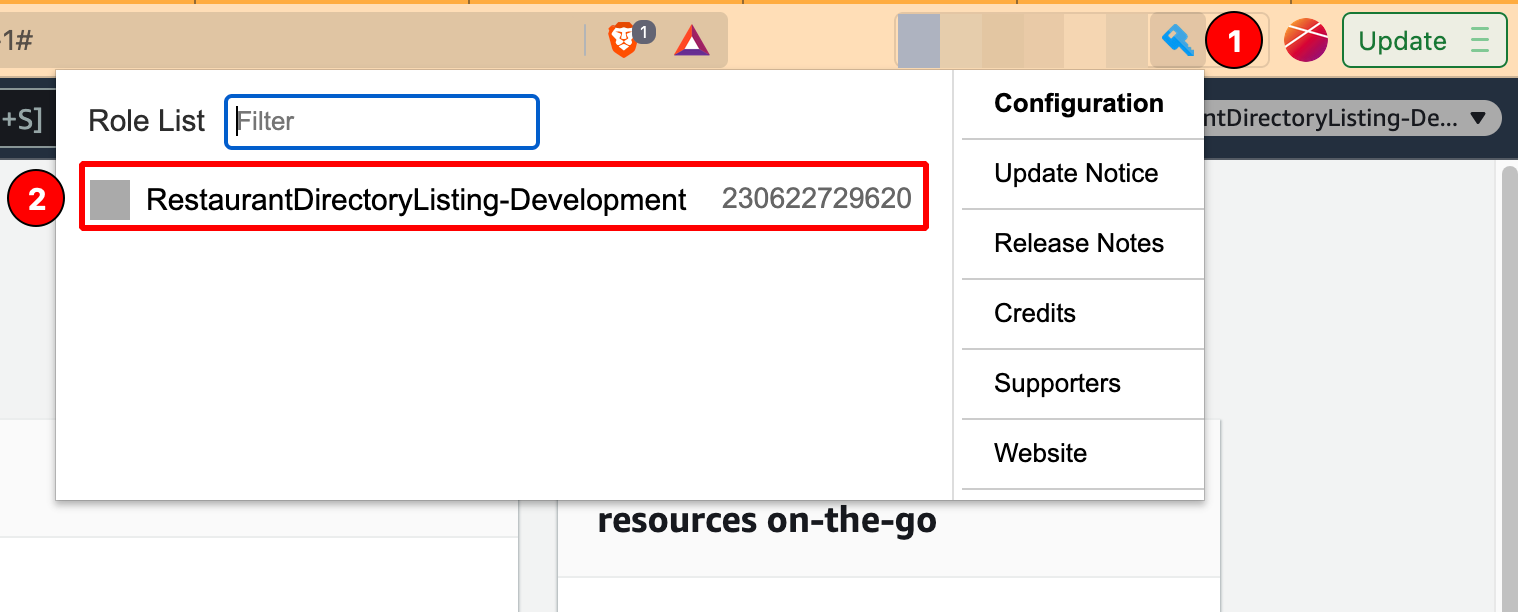

🪖🎖️ Pro Tip: Use an extension to skip the form

Even though the above method is easy, it's quite boring.

Instead, I recommend the AWS Extend Switch Roles extension (available for Chrome, Firefox, and Edge).

It lets you switch between roles easily, so you're always one click away from impersonating any account.

An example configuration looks like this:

```ini
[Staging Account]
aws_account_id = 000000000000
role_name = admin-role
region = us-east-1
```

⛑️ Set up the AWS CLI to assume the role

Accessing such accounts through the CLI is even easier, and no, you don't have to manually run `aws sts assume-role`.

Here is the setup for your ~/.aws/credentials file:

```ini
# 👇 Usual setup of a regular user
[root-account]
aws_access_key_id = AKIAXXXXXXXXXXXXXXXX
aws_secret_access_key = XXXXXXXXXXXXXXXX

# 👇 Define a profile for the staging account
[staging-account]
# 👇 Which role to assume?
role_arn = arn:aws:iam::000000000000:role/admin-role
# 👇 Use the profile above to assume this role
source_profile = root-account
```

So any time you run `export AWS_PROFILE=staging-account`, the AWS CLI automatically assumes the role for you and gives you access to the resources you should have. Pretty cool, huh?

Check it yourself:

```shell
❯ export AWS_PROFILE=staging-account
❯ aws sts get-caller-identity
{
    "UserId": "AROATLMRPSWKBES5PAFXV:botocore-session-1637767588",
    "Account": "000000000000",
    "Arn": "arn:aws:sts::000000000000:assumed-role/admin-role/botocore-session-1637767588"
}
```

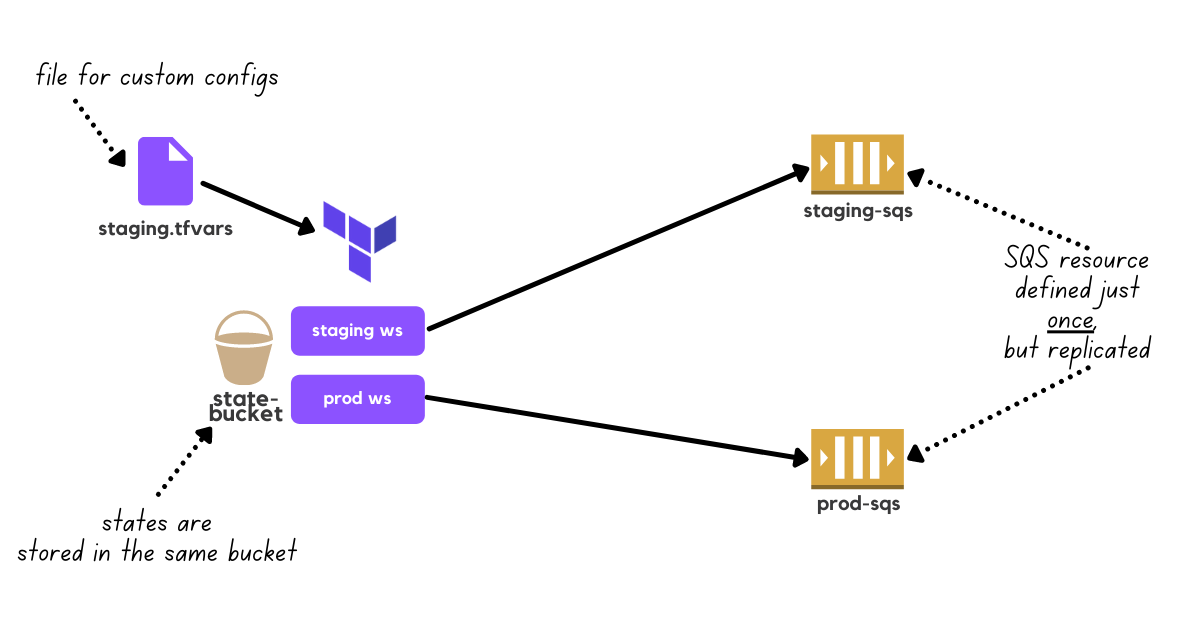

🪐 Replicating resources with Terraform workspaces

Now that you have different AWS accounts, you might wonder how they work with Terraform. I have seen too much repetition, both inside AWS (resource naming hell) and within Terraform (WET code). Let's solve resource replication first, and then move on to the multi-account setup.

As an example, let's say we want a separate SQS queue per environment, and for simplicity let's stick to the staging and production accounts.

So, the first thing that I see people doing is:

```text
.
└── src
    └── queues
        ├── main.tf   # 👈 Resources defined here
        ├── vars.tf
        └── state.tf  # 👈 Terraform backend state config
```

```hcl
# main.tf

# 👇 Declares that we're using the AWS provider
provider "aws" {
  region = "us-east-1"
}

# 👇 Defines SQS for staging
module "staging_sqs" {
  source  = "terraform-aws-modules/sqs/aws"
  version = "~> 2.0"

  # 👇 Naming hell: we add a prefix to specify the var
  name = var.staging_sqs_name

  message_retention_seconds = 86400 # 👈 Staging messages kept for 1 day

  # 👇 We tag the resource with the env
  tags = {
    env = "staging"
  }
}

# 👇 Defines SQS for prod
module "prod_sqs" {
  source  = "terraform-aws-modules/sqs/aws"
  version = "~> 2.0"

  # 👇 Naming hell: we add a prefix to specify the var
  name = var.prod_sqs_name

  message_retention_seconds = 259200 # 👈 Prod messages kept for 3 days

  # 👇 We tag the resource with the env
  tags = {
    env = "prod"
  }
}
```

Three things to keep in mind:

- We don't want to copy/paste code to represent the same resource per environment, that sucks

- Different environments have different resource configurations (e.g. Staging databases can be way smaller than production ones), and for our example, the SQS queues have different tags and message retention time

- We need to create them in different AWS accounts but associated with the root account

That's where terraform workspaces shine! You can have the same definition of resources with different states.

If you've never tried them, I recommend playing with them by running:

```shell
❯ terraform workspace list
* default

❯ terraform workspace new staging
Created and switched to workspace "staging"!

You're now on a new, empty workspace. Workspaces isolate their state,
so if you run "terraform plan" Terraform will not see any existing state
for this configuration.

❯ terraform workspace list
  default
* staging
```

Keep in mind that every workspace has an independent state, and you can switch between workspaces with `terraform workspace select <workspace-name>`.

Assuming you created two workspaces named staging and prod, we need to modify our directory structure a bit to keep a separate config per workspace, and update our main.tf file:

```text
.
└── src
    └── queues
        ├── workspaces          # 👈 New directory
        │   ├── prod.tfvars     # 👈 I recommend naming it after the workspace to keep it obvious
        │   └── staging.tfvars
        ├── main.tf
        ├── vars.tf
        └── state.tf
```

And now our main.tf can be updated to look like this:

```hcl
provider "aws" {
  region = "us-east-1"
}

module "sqs" { # 👈 Cleaner name
  source  = "terraform-aws-modules/sqs/aws"
  version = "~> 2.0"

  name                      = "${terraform.workspace}-sqs" # 👈 Keeping the same structure for example
  message_retention_seconds = var.message_retention_seconds

  # 👇 We tag the resource with the env
  tags = {
    env = terraform.workspace
  }
}
```

Way better, right? The biggest difference is the directory structure, where we included two new files: prod.tfvars and staging.tfvars.

These files are quite simple, though. Here's staging.tfvars:

```hcl
# staging.tfvars
message_retention_seconds = 86400
```

and prod.tfvars:

```hcl
# prod.tfvars
message_retention_seconds = 259200
```
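Both files set `message_retention_seconds`, so vars.tf has to declare that variable. The post doesn't show vars.tf, so this is only a sketch of what it could contain; the `validation` block (Terraform 0.13 or later) is an optional addition that encodes SQS's allowed range of 60 to 1,209,600 seconds (14 days):

```hcl
# vars.tf (sketch): declares the variable set by the .tfvars files
variable "message_retention_seconds" {
  description = "How long SQS keeps a message, in seconds"
  type        = number

  # SQS accepts 60 seconds (1 minute) up to 1209600 seconds (14 days)
  validation {
    condition     = var.message_retention_seconds >= 60 && var.message_retention_seconds <= 1209600
    error_message = "The message_retention_seconds value must be between 60 and 1209600."
  }
}
```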

And finally, the expected usage would be, for example:

```shell
❯ terraform workspace select staging
Switched to workspace "staging".

# 👇 Now you must use `-var-file`
❯ terraform plan -var-file ./workspaces/staging.tfvars
❯ terraform apply -var-file ./workspaces/staging.tfvars
```
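If passing `-var-file` on every command feels error-prone, a different technique (not the one this post uses) is to keep the per-environment values in a map keyed by `terraform.workspace`. A sketch, reusing the values from the .tfvars files above; it would replace `var.message_retention_seconds` in main.tf:

```hcl
# Alternative sketch: pick per-environment settings from the workspace name
locals {
  settings_per_workspace = {
    staging = { message_retention_seconds = 86400 }  # 1 day
    prod    = { message_retention_seconds = 259200 } # 3 days
  }

  # Fails with an "Invalid index" error in any workspace not listed above
  # (including "default"), which prevents accidental applies there.
  settings = local.settings_per_workspace[terraform.workspace]
}

module "sqs" {
  source  = "terraform-aws-modules/sqs/aws"
  version = "~> 2.0"

  name                      = "${terraform.workspace}-sqs"
  message_retention_seconds = local.settings.message_retention_seconds

  tags = {
    env = terraform.workspace
  }
}
```

The trade-off: every environment's values live in one file, whereas .tfvars files keep each environment's config apart.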

With an S3 backend, each workspace's state is stored as a separate object inside the same bucket.
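The post doesn't show state.tf; assuming the S3 backend, it could look like the sketch below, where the bucket name and key are hypothetical. With the default `workspace_key_prefix` of `env:`, the default workspace keeps its state at `key`, while every other workspace gets its own path, e.g. `env:/staging/queues/terraform.tfstate`:

```hcl
# state.tf (sketch): S3 backend living in the root account.
# The bucket name and key are hypothetical placeholders.
terraform {
  backend "s3" {
    bucket = "my-terraform-states"
    key    = "queues/terraform.tfstate"
    region = "us-east-1"

    # Default value, shown for clarity: non-default workspaces are stored
    # under env:/<workspace>/queues/terraform.tfstate
    workspace_key_prefix = "env:"
  }
}
```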

🏗🪐 Terraform with AWS Organizations (multi-accounts)

That's already way better, but all the resources still live in the same account. Let's fix that by telling Terraform to use a different account for each environment.

Given that you already have a nice, reusable directory structure, we just need to modify three places:

- main.tf, with the assume_role option;

- prod.tfvars and staging.tfvars, with the respective AWS config vars.

```hcl
# main.tf
provider "aws" {
  region = "us-east-1"

  # 👇 Identifies which role Terraform should assume when planning and applying resources
  assume_role {
    role_arn = var.aws_role
    # 👆 We can keep different vars per environment!
  }
}

...
```

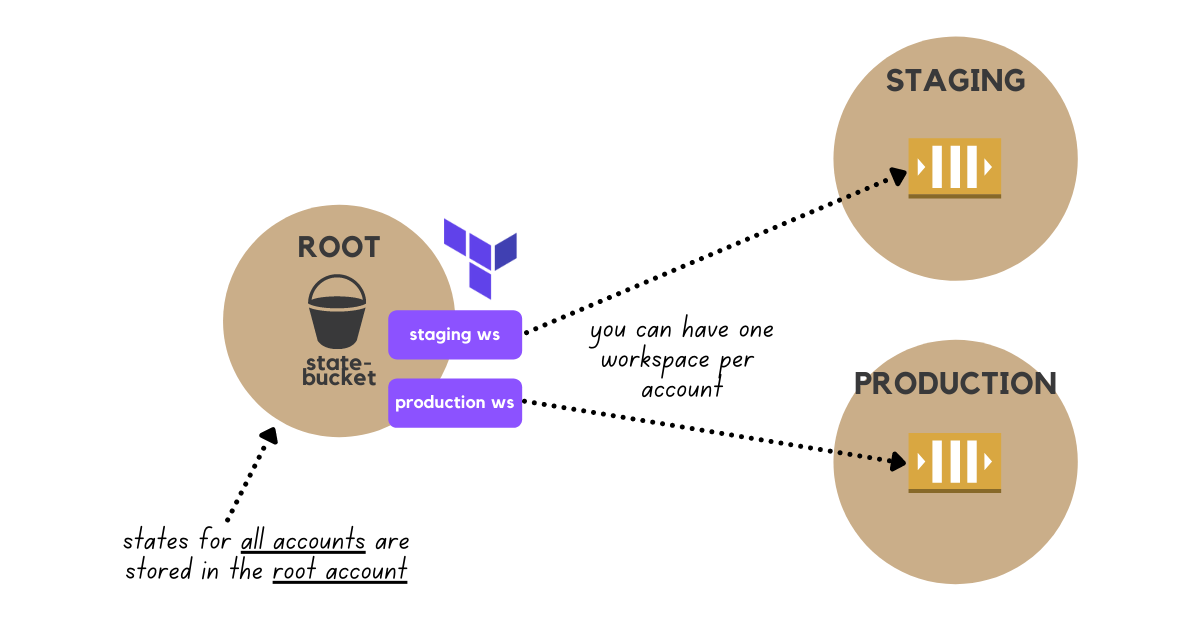

Keep in mind that Terraform still stores the state in a bucket located in the root account (the backend doesn't use the provider's assume_role), but every interaction with the cloud resources first assumes the role in the target account.

Of course, you can set the aws_role var per environment:

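```hcl
# staging.tfvars
aws_role = "arn:aws:iam::000000000000:role/admin-role"
```

```hcl
# prod.tfvars
aws_role = "arn:aws:iam::222222222222:role/admin-role"
```

Here, 000000000000 and 222222222222 stand for the full 12-digit IDs of the staging and production accounts.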
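The `aws_role` variable also has to be declared, for example in vars.tf. A sketch; the `validation` block is an optional addition that rejects anything that isn't an IAM role ARN with a 12-digit account ID, so a mistyped account ID fails early instead of at role-assumption time:

```hcl
# vars.tf (sketch): the role the AWS provider assumes in each workspace
variable "aws_role" {
  description = "ARN of the IAM role Terraform assumes in the target AWS account"
  type        = string

  # Optional check: AWS account IDs always have exactly 12 digits
  validation {
    condition     = can(regex("^arn:aws:iam::[0-9]{12}:role/", var.aws_role))
    error_message = "The aws_role value must be an IAM role ARN with a 12-digit account ID."
  }
}
```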

✨ And that's it! Now you have reusable Terraform code shared across environments, and well-named resources. Cheers! 🍻

👀 Want to see a real project?

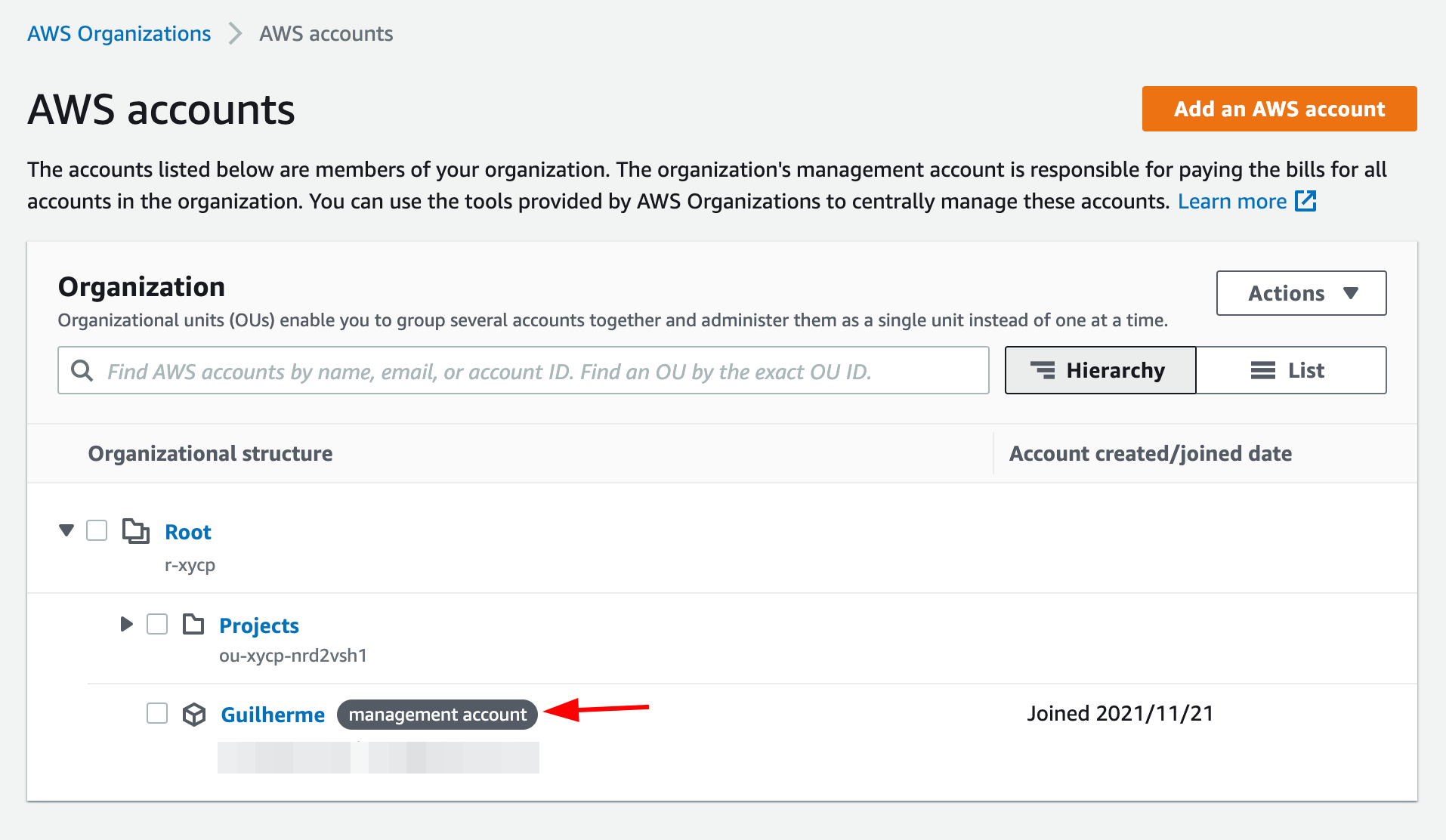

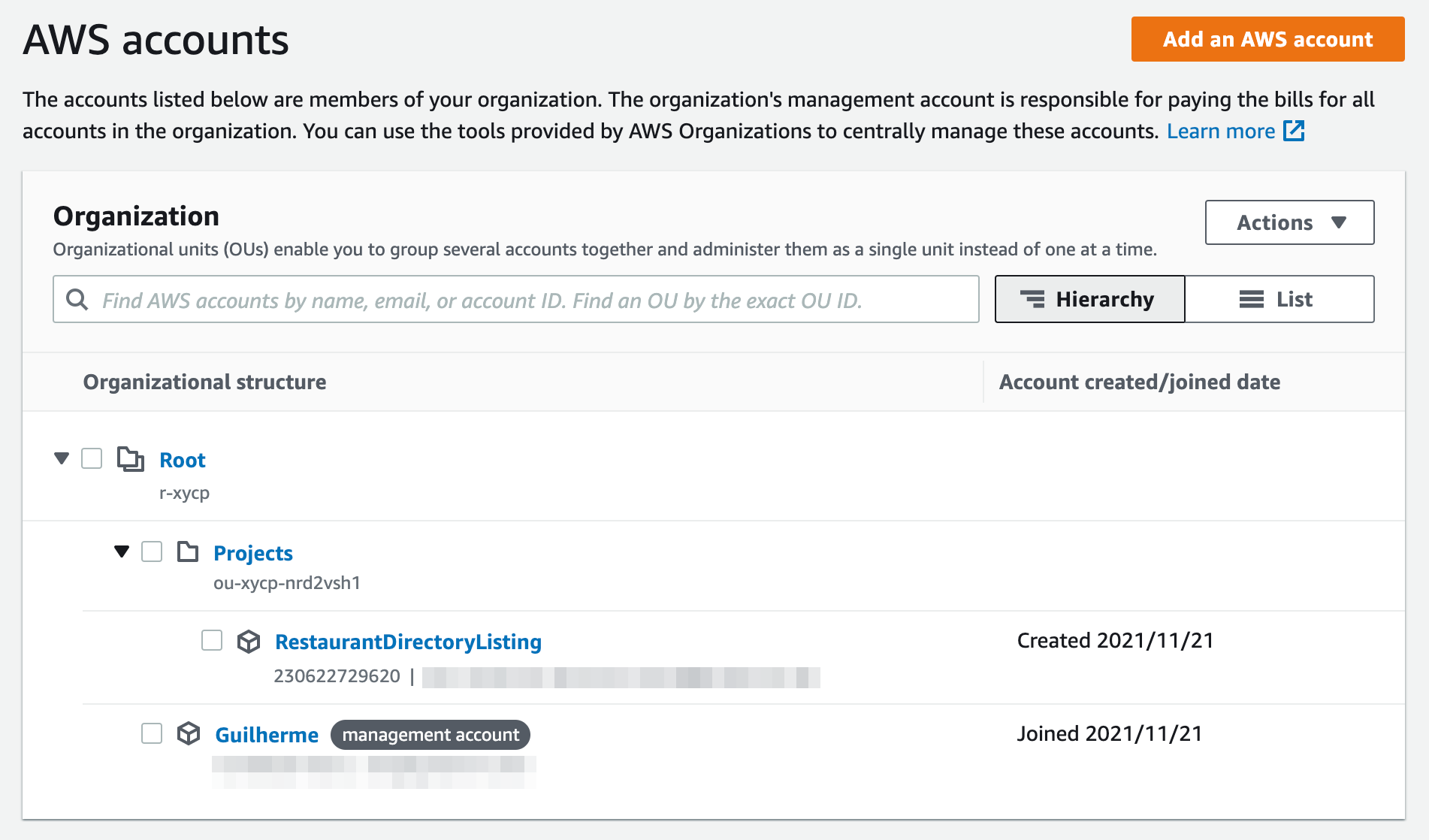

I'm building a microservice architecture in public in a new series called the AntifragileDev while keeping the whole code open source and sharing my journey and learnings as I go.

You probably saw some references to my new project in the AWS org examples above, right? 😁 I'll share everything (including costs) about keeping a microservice architecture up and running.

If that's something you're interested in, you should follow me on Twitter.

Did you know you can create multiple AWS accounts for better management?

— Gui Latrova (@guilatrova) November 23, 2021

This feature is called "AWS organizations".

The root account will be in charge of paying for the usage of children accounts.

👇🧵 Thread pic.twitter.com/AjXPdsGlgG

This post is part of this project, where I bring real needs, build all microservices in public, and keep them open source.

👇 And by the way, this is the infrastructure project I meant, feel free to explore!